Supercomputers: A tale of shovels and nuggets.

Who will be the leaders of the next phase of the AI theme? Insights on Tesla Dojo, Meta RSC and Cerebras Andromeda.

Check out my interview with Randy Kirk on the May 2023 CPI report here.

After years of teasing, Tesla formally announced its Dojo supercomputer at its August 19, 2021 AI Day. It was an exciting concept reveal that provided a roadmap for Tesla to become a true AI player. You can find Ganesh Venkataramanan’s Dojo presentation here.

What followed can best be described as radio silence. For a long time, it was very difficult to get tangible information on Tesla’s progress with Dojo. To be honest, I had started to suspect this was another case of overpromising. We are still waiting for the new Roadster, for robotaxis and for Semi mass production, and I feared we would also have to wait forever for Dojo. Can’t take Elon’s words at face value, right?

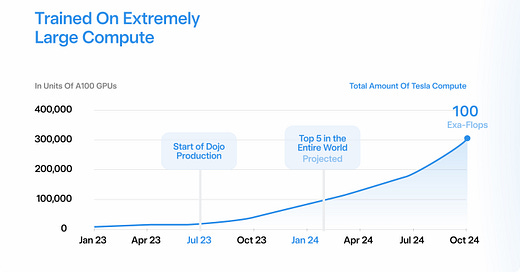

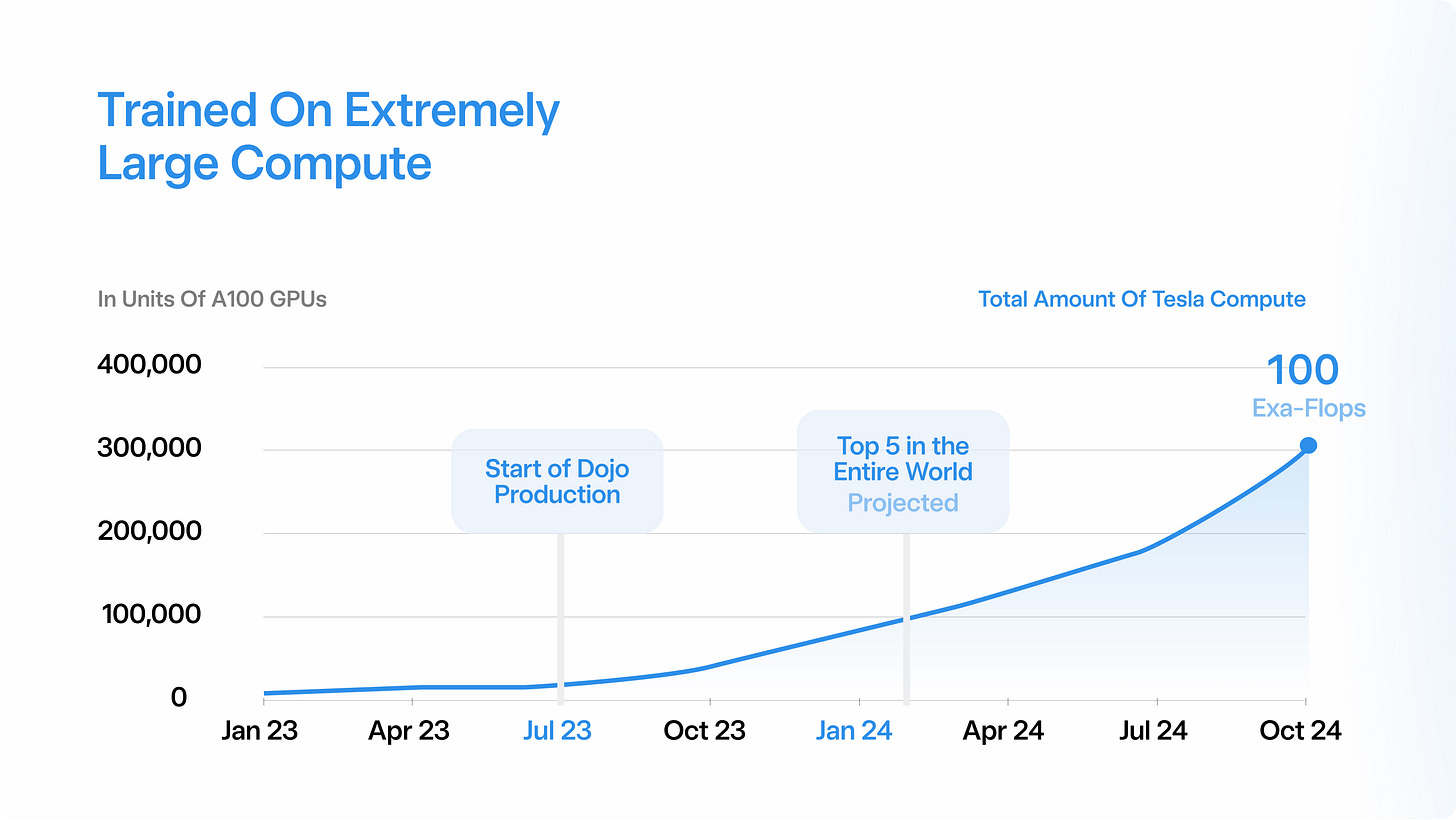

But something finally happened. Tesla has created a new Twitter account called Tesla AI. It entered the digital world yesterday with a big bang, posting a thread that presented some of its current and future projects. One tweet stood out, not just for me but for many other Twitter users as well. It had about 40x more views than the other tweets, and it published a roadmap for Dojo’s expected ramp.

Dojo will start production next month. By early next year, Tesla wants Dojo to be among the Top 5 supercomputers in the world. By the end of next year, it wants to scale that up by another factor of 100 (!). To be fair, this is still promising, not delivering. But it is very specific, which gives it credibility in my view.

In this article, I will contextualize this information into the broader AI theme that is currently unfolding.

The profundity of ChatGPT

ChatGPT’s release on November 30, 2022 marked a historical turning point that will likely rival the iPhone reveal on January 9, 2007. It is not such a breakthrough product in and of itself. It’s still quite buggy and often more a toy than a tool. It’s also not a brand-new invention, since OpenAI had had the underlying technology since 2020. So its historical relevance comes neither from its functionality nor from its novelty. It comes from the fact that it revealed the power of deep learning to the public for the first time, and at a large scale.

Just predict the next word

It’s amazing how it can imitate (or should I say replicate) human communication and comprehension with a conceptually simple statistical objective: predicting the next word. And it’s even more amazing how close deep learning is to human learning. An LLM learns and communicates just like you and me. When we talk to each other, we don’t know the entire sentence or monologue we’re about to utter in advance. We speak one word at a time, and by the end of it, a profound thought or idea might have been born. ChatGPT sparks our imagination of what AI might soon be capable of with a human style of knowledge acquisition and distribution.
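Real LLMs predict the next token with a deep neural network trained on enormous corpora, and they usually sample from a learned probability distribution. None of that is reproduced here. The toy Python below is only a minimal sketch of the "one word at a time" loop. It counts which word follows which in a tiny made-up corpus (a bigram model) and then greedily appends the most frequent successor. The function names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 8) -> list[str]:
    """Emit one word at a time, always picking the most frequent successor."""
    out = [start]
    for _ in range(max_words):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # no known successor: stop generating
        # Counter.most_common breaks ties by first-seen order.
        out.append(candidates.most_common(1)[0][0])
    return out


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(" ".join(generate(model, "the", max_words=4)))  # the cat sat on the
```

Very loosely, you move from this toy toward an LLM in two steps. First, replace the word counts with a neural network conditioned on the whole preceding text. Second, replace the greedy choice with sampling.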

This has started a gold rush in the IT industry. The most prominent beneficiary so far has been Nvidia, whose stock has quadrupled since its October 2022 low, and many others have followed in its slipstream. Nvidia’s run in particular has been heavily scrutinized, but I can certainly see the bull case following its latest earnings release.

I don’t have the tools to build out this case with conviction, though. But there is definitely a fundamental story here, considering Nvidia’s dominant GPU position and its relevance to the deep learning industry. What I do know is that Nvidia plans primarily to enable others to dig for gold, not to dig itself. Think of it as a prime AI supplier.

It is therefore not surprising that the stock is flying first. Markets don’t like uncertainty. They don’t know whether the AI hype will live up to its promises, but they can anticipate that many companies will dig in the coming years in a big FOMO rush. Hence, the obvious bet is going long the shovel seller, or in this case even the seller of the shafts and blades for the shovels.

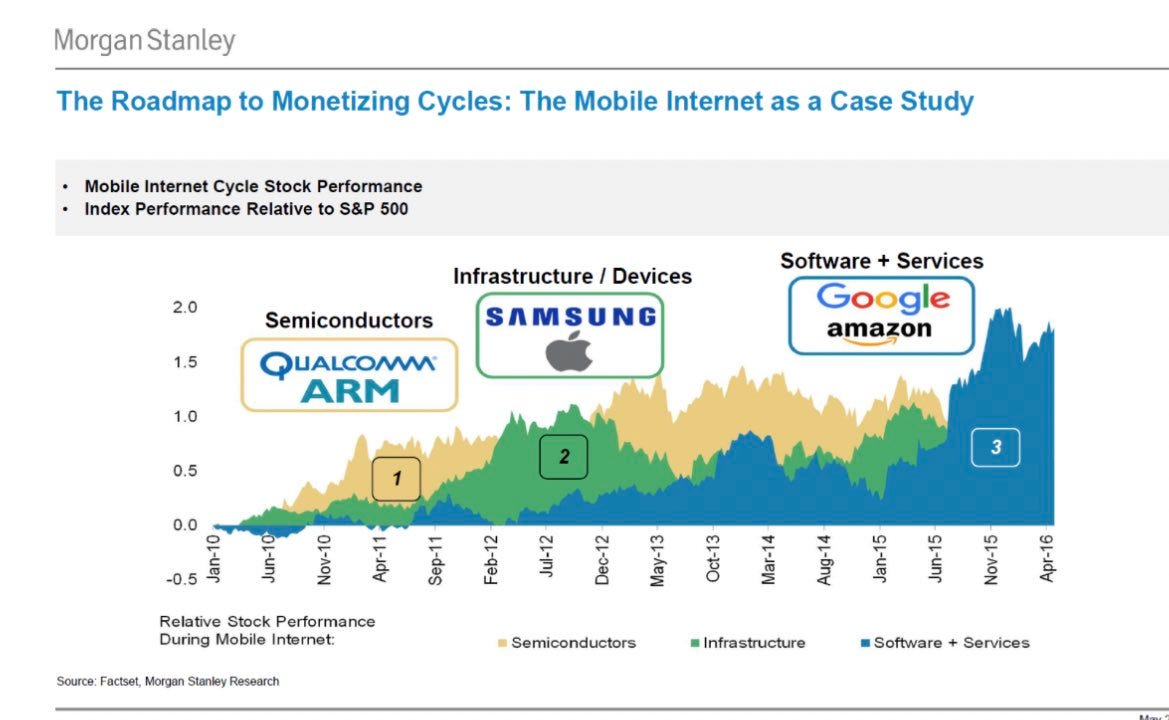

Such a sequence is not new in innovation cycles. The 2010s mobile computing revolution also caused a spike in computing demand, and chips were the first to benefit.

Historical precedents suggest that the first to cash in on a gold rush are those selling the equipment. They are the Enablers. If the gold rush is justified, the next to cash in are those who successfully use the shovels and pans to find gold. They are the Diggers. Next in line are those selling products and services based on the nuggets the Diggers find. Think of goldsmiths, for example. They are the Refiners. (It will take years before I care about those.)

I don’t know how much of the Enablers’ success is priced in. Presumably at least a good amount of it. The natural follow-up questions for me are then a) is there actually gold to find and b) who will be the first to find it. I will focus on the second question from here.

With mobile computing, you needed to bet on the winning devices, operating systems and, later, apps. What is the ultimate AI device? What is the 2020s equivalent of the 2010s handset?

Enter: Supercomputers

Dedicated Supercomputers vs. Cloud Solution

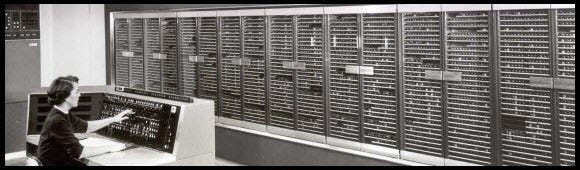

One of the defining features of deep learning is the enormous size of the underlying models and the computing power and capacity needed to train them. This will cause a revival of machine-room-sized computers, reminiscent of the vacuum-tube machines built before the transistor was invented in 1947.
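To put "enormous" into numbers, a common rule of thumb from the scaling-law literature estimates training compute at roughly 6 FLOPs per model parameter per training token. The sketch below applies that rule to GPT-3-scale figures (175 billion parameters, 300 billion tokens). It then converts the total into wall-clock time on a hypothetical 1-exaflop machine, assuming 40% utilization. Both the rule and the utilization are approximations, not measured values, and the helper names are hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rule of thumb: ~6 FLOPs per parameter per token
    (about 2 for the forward pass and 4 for the backward pass)."""
    return 6 * params * tokens


def days_on_machine(total_flops: float, machine_flops: float, utilization: float) -> float:
    """Wall-clock days on a machine sustaining `utilization` of its peak FLOP/s."""
    seconds = total_flops / (machine_flops * utilization)
    return seconds / 86_400


# GPT-3-scale assumption: 175 billion parameters, 300 billion training tokens.
flops = training_flops(175e9, 300e9)
print(f"{flops:.2e} FLOPs")  # 3.15e+23 FLOPs

# Hypothetical 1-exaflop machine at an assumed 40% sustained utilization.
print(f"{days_on_machine(flops, 1e18, 0.40):.1f} days")  # 9.1 days
```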

So far, most deep learning is happening in the cloud. OpenAI trains ChatGPT on Microsoft Azure, which runs on a decentralized fleet of Nvidia GPUs.

The reason for the revival of centralized on-premise supercomputers is the importance of linear scaling, the holy grail of deep learning: you want two GPUs to perform twice as well as one. This is very difficult to achieve in traditional cloud set-ups, which are not designed to optimally balance compute, bandwidth and latency.
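Why is linear scaling hard? Here is a minimal sketch under a few assumptions:

- Training is data-parallel, so each step's fixed workload is split across n GPUs.
- The gradients are then synchronized with a textbook ring all-reduce: 2(n-1) steps, each moving 1/n of the gradient.
- All numbers and helper names are hypothetical.
- Real systems overlap communication with compute, which this toy model ignores.

```python
def ring_allreduce_seconds(n: int, grad_bytes: float, bandwidth: float, latency: float) -> float:
    """Textbook ring all-reduce: 2(n-1) steps, each paying a latency and moving grad_bytes/n."""
    if n == 1:
        return 0.0  # a single GPU has nothing to synchronize
    return 2 * (n - 1) * (latency + grad_bytes / (n * bandwidth))


def scaling_efficiency(n: int, compute_seconds: float, grad_bytes: float,
                       bandwidth: float, latency: float) -> float:
    """Speedup over one GPU divided by n; 1.0 means perfectly linear scaling."""
    step = compute_seconds / n + ring_allreduce_seconds(n, grad_bytes, bandwidth, latency)
    speedup = compute_seconds / step
    return speedup / n


# Hypothetical workload and interconnect.
COMPUTE_S = 1.0     # seconds per training step on a single GPU
GRAD_BYTES = 1e9    # 1 GB of gradients to synchronize per step
LATENCY_S = 10e-6   # 10 microseconds per ring step

for bandwidth in (25e9, 100e9):  # bytes per second per link
    row = [f"{scaling_efficiency(n, COMPUTE_S, GRAD_BYTES, bandwidth, LATENCY_S):.2f}"
           for n in (1, 8, 64, 512)]
    print(f"{bandwidth / 1e9:.0f} GB/s:", row)
```

In this model, the compute share shrinks as 1/n. The all-reduce term does not: it approaches roughly twice the gradient size divided by link bandwidth, plus a latency cost that grows with n. So efficiency falls as GPUs are added. More bandwidth pushes that wall back, and so does lower latency.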

What are compute, bandwidth and latency?

Think about an ultralight single-speed track bike, for example. It is made for maximum performance in the velodrome, where riders often reach more than 80 km/h. But a track bike is not that versatile out in the wild. Perhaps you want to go into the mountains, so add some shifters and a cassette. And on gravel. Thicker tires. Longer distance. Comfier saddle and more upright position. Now you can go anywhere. But you won’t win the gold medal on the track.

Just like the ideal bike, the ideal computer system has to be optimized for its intended task along all relevant dimensions. It’s not just about how many calculations a system can hypothetically perform per time interval (top speed). It’s also about how much and how fast it can be provided with data. Achieving maximum performance is a lot about digital logistics, i.e. shifting bits and bytes around intelligently.
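The "top speed versus being fed data" point has a standard formalization: the roofline model. A computation can run no faster than the lower of two limits. One is the chip's peak compute. The other is its memory bandwidth multiplied by the computation's arithmetic intensity (FLOPs performed per byte moved).

The sketch below makes a few assumptions:

- The accelerator is hypothetical, with 300 TFLOP/s peak and 2 TB/s memory bandwidth.
- Elements are 16-bit.
- The matrix-multiply intensity formula assumes each matrix crosses memory exactly once, which is an idealization.
- The helper names are hypothetical.

```python
def attainable_flops(peak_flops: float, mem_bandwidth: float, intensity: float) -> float:
    """Roofline model: performance is capped by compute or by data delivery."""
    return min(peak_flops, mem_bandwidth * intensity)


def matmul_intensity(n: int, bytes_per_element: int = 2) -> float:
    """FLOPs per byte for an n x n matrix multiply: 2n^3 FLOPs over 3 n^2 elements moved."""
    return (2 * n**3) / (3 * n**2 * bytes_per_element)


PEAK = 300e12      # hypothetical peak compute, FLOP/s
BANDWIDTH = 2e12   # hypothetical memory bandwidth, bytes/s
print(f"ridge point: {PEAK / BANDWIDTH:.0f} FLOPs/byte")

# Elementwise add of 16-bit values: 1 FLOP per 6 bytes (two reads, one write).
for label, intensity in [("elementwise add", 1 / 6),
                         ("matmul n=64", matmul_intensity(64)),
                         ("matmul n=4096", matmul_intensity(4096))]:
    share = attainable_flops(PEAK, BANDWIDTH, intensity) / PEAK
    print(f"{label}: {share:.1%} of peak")
```

Low-intensity work leaves most of the compute idle, no matter how high the top speed is. That is the digital-logistics problem in a single `min`.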

Using a restaurant as another analogy: if there are only burgers on the menu and there are 10 chefs in the kitchen, all of whom can make burgers, the restaurant will work very efficiently and productively. But what if the restaurant also wants to be able to serve sushi? Then at least one of the 10 chefs needs to be an itamae, a trained sushi chef. And if there are no sushi orders at a given point in time, the itamae will be idle and the restaurant’s productivity will drop.

Decentralized cloud computing will obviously still have its place and will grow massively. But a new sub-industry of dedicated, centralized on-premise supercomputers will emerge. That’s the most promising place to find gold first (if there is any), which raises a natural follow-up question.

Who has embraced this paradigm shift best?

Academic Operators

Supercomputers are typically ranked on the compute dimension alone, measured in floating point operations per second (FLOPS). The TOP500 list, for instance, uses double-precision (FP64) performance on the High-Performance LINPACK (HPL) benchmark. By this metric, the current leader is the Frontier supercomputer, which was built by Hewlett Packard Enterprise (HPE) and is operated by the US Department of Energy. It delivers about 1.2 exaflops and cost $600m to build. It is primarily used to solve science and research problems.

There are similar systems around the world, like Fugaku in Japan or LUMI in Finland. All of these have impressive specs, but I believe it is fair to say that their economic impact has been limited so far. Commercial operators will take center stage in the next phase of supercomputing. I think it likely that we will soon see supercomputer announcements from the likes of Microsoft and Google. So far, though, three other companies stand out: Meta, Cerebras and Tesla.

Meta

Meta introduced its Research SuperCluster (RSC) on January 24, 2022. It is designed for a variety of tasks, including computer vision, speech recognition and natural language processing.

It’s a massive system that was apparently assembled from off-the-shelf components with a great sense of urgency. Its estimated cost is $400m, $300m of which is for Nvidia GPUs alone. And that is just phase 1. Phase 2 is expected to roughly treble the system, which would bring its compute to 5 exaflops.
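The 5-exaflop figure can be sanity-checked with back-of-the-envelope arithmetic. The sketch assumes the GPU counts from Meta's announcement as I understand them: 6,080 A100 GPUs in phase 1 and about 16,000 when complete. It also assumes NVIDIA's published dense BF16/FP16 tensor-core peak of 312 TFLOP/s per A100. These are peak numbers at reduced precision, not sustained performance, and `cluster_exaflops` is a hypothetical helper.

```python
A100_BF16_PEAK = 312e12  # FLOP/s, dense BF16/FP16 tensor-core peak per A100


def cluster_exaflops(num_gpus: int, per_gpu_flops: float = A100_BF16_PEAK) -> float:
    """Aggregate peak compute of a GPU cluster in exaflops (1e18 FLOP/s)."""
    return num_gpus * per_gpu_flops / 1e18


phase1 = cluster_exaflops(6_080)   # assumed phase 1 GPU count
phase2 = cluster_exaflops(16_000)  # assumed GPU count when complete
print(f"phase 1: {phase1:.1f} EF, phase 2: {phase2:.1f} EF, growth: {phase2 / phase1:.1f}x")
# phase 1: 1.9 EF, phase 2: 5.0 EF, growth: 2.6x
```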

Cerebras

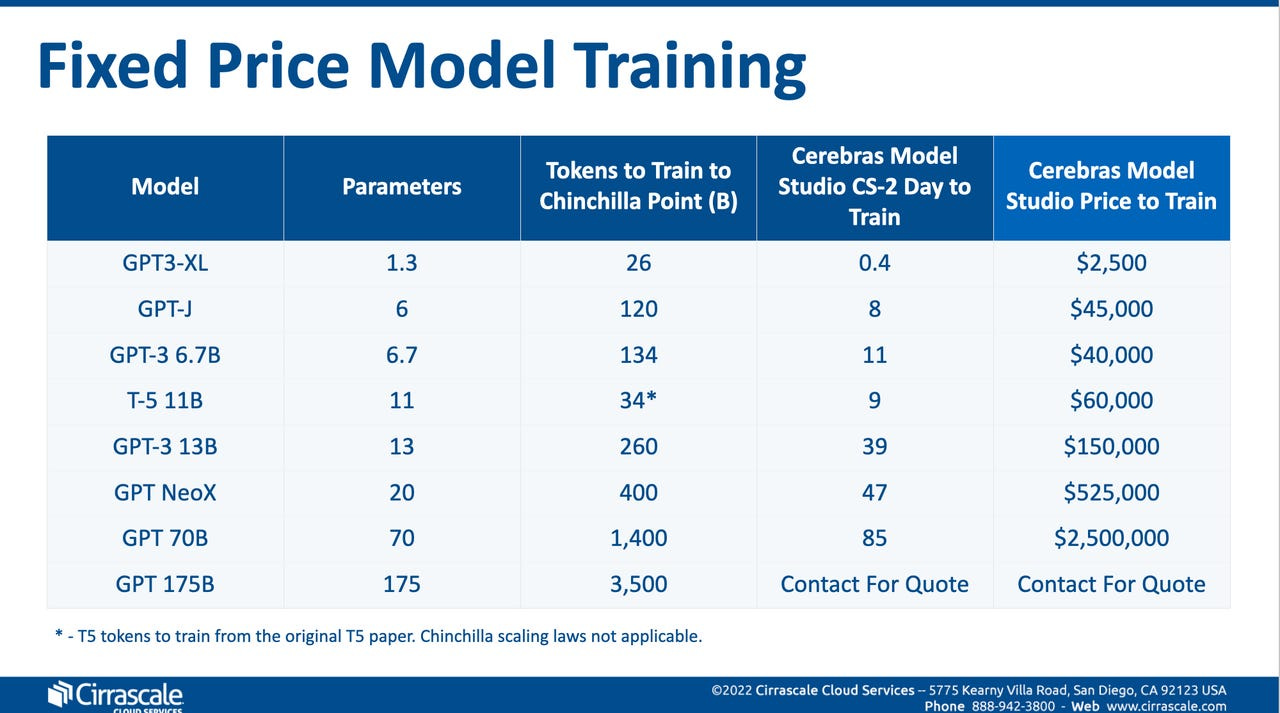

Cerebras is an AI supercomputing pure play that is currently privately held. It has developed Andromeda, currently one of the largest AI computers ever built, with 1 exaflop of compute. It’s difficult to learn about this company from the outside because it is not publicly listed. But we can use it to peek into the future of supercomputing-as-a-service by looking at its product offering.

Cerebras positions itself as a challenger to traditional cloud computing. With its purpose-built computer system, it claims it can train an LLM 8 times faster than a traditional cloud service while delivering substantial cost savings.

Cerebras typically gets paid per completed training run. A single training run can easily cost millions of dollars.
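Why can a single training run cost millions? Here is a minimal sketch under four assumptions:

- A GPT-3-scale compute budget of about 3.15e23 FLOPs.
- A100-class GPUs with a 312 TFLOP/s BF16 peak.
- 40% sustained utilization.
- A hypothetical rental price of $2 per GPU-hour.

None of these are Cerebras figures, and `training_cost_usd` is a hypothetical helper.

```python
def training_cost_usd(total_flops: float, gpu_peak_flops: float,
                      utilization: float, usd_per_gpu_hour: float) -> tuple[float, float]:
    """Return (GPU-hours, dollars) for a training run at a given sustained utilization."""
    gpu_seconds = total_flops / (gpu_peak_flops * utilization)
    gpu_hours = gpu_seconds / 3600
    return gpu_hours, gpu_hours * usd_per_gpu_hour


hours, cost = training_cost_usd(3.15e23, 312e12, 0.40, 2.00)
print(f"{hours:,.0f} GPU-hours, about ${cost / 1e6:.1f}M")
# 701,122 GPU-hours, about $1.4M
```

In this model, a system that finishes the same run faster at a similar hourly price directly lowers the cost per run.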

Assuming that tailor-made AI models will be ubiquitous in the future, it is not hard to imagine that this will become an enormously financially rewarding business model. I will take notice when they go public in a few years. It might serve as an important sentiment signal.

Tesla

I remember that I found the first Dojo presentation exciting. But with hindsight I don’t think I fully comprehended the importance of that moment. There was a lot of shareholder signal in that presentation.

Firstly, it demonstrated that Tesla understood the relevance and value of a proprietary on-premise supercomputer years before ChatGPT was introduced, and that it placed its bets accordingly. This could prove as significant as its grid-scale energy storage project in Hornsdale, Australia. Back then, bears ridiculed Tesla for that project, arguing it had done nothing more than stack third-party batteries together. But in truth, the project laid the foundation for Tesla Energy, which I expect will soon be a $100bn business.

Secondly, it’s a testament to Tesla’s build-vs.-buy philosophy, which has often created huge shareholder value before. Tesla rarely does M&A, and it has built its own enterprise software, for example. Where Meta simply goes shopping for a $1bn system, Tesla builds a system from scratch that fits its needs exactly, and that effort builds in-house expertise as a byproduct.

This brings me to the third point: a computer system always has to be built with trade-offs along the dimensions mentioned above. Performance and flexibility are negatively correlated. What intrigued me most about the presentation was how Tesla embraced that fact. Dojo is designed for a very specific task: enabling AI to perceive the physical world and navigate it using computer vision alone. Here is Ganesh Venkataramanan’s key quote:

“One thing which is common trend amongst [computing networks] is it's easy to scale the compute, it's very difficult to scale up bandwidth and extremely difficult to reduce latencies. And you'll see how our design point catered to that.”

Ganesh Venkataramanan, 2021 AI Day

In his 20-minute presentation, he mentioned bandwidth 29 times. Tesla is hyperfocused on linear scaling for the specific task it has in mind.
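Venkataramanan's ordering (compute is easy, bandwidth is hard, latency is hardest) can be illustrated with the classic latency-bandwidth ("alpha-beta") model of a message transfer: time = latency + size / bandwidth. The sketch below assumes a hypothetical link with 100 GB/s of bandwidth and 5 microseconds of latency. For small and large messages, it compares doubling the bandwidth against halving the latency. `transfer_seconds` is a hypothetical helper.

```python
def transfer_seconds(size_bytes: float, bandwidth: float, latency: float) -> float:
    """Alpha-beta model: fixed per-message latency plus serialization time."""
    return latency + size_bytes / bandwidth


BANDWIDTH = 100e9  # hypothetical link bandwidth, bytes/s
LATENCY = 5e-6     # hypothetical per-message latency, seconds

for size in (1e3, 1e6, 1e9):  # 1 KB, 1 MB, 1 GB
    base = transfer_seconds(size, BANDWIDTH, LATENCY)
    faster_link = base / transfer_seconds(size, 2 * BANDWIDTH, LATENCY)
    lower_latency = base / transfer_seconds(size, BANDWIDTH, LATENCY / 2)
    print(f"{size:>13,.0f} B: 2x bandwidth -> {faster_link:.2f}x faster, "
          f"half latency -> {lower_latency:.2f}x faster")
```

Small messages are latency-bound, so extra bandwidth barely helps them, while large messages are bandwidth-bound. A system aiming for linear scaling has to attack both terms.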

As outlined above, Dojo is expected to start production next month. But Elon pointed out yesterday that it has actually been online for a few months already.

It would be an amazing achievement if Tesla truly manages to ramp Dojo next year to 100x the compute of a current top 5 supercomputer. The y-axis of that chart suggests this would be equivalent to 300,000 Nvidia A100 GPUs. I may be getting this wrong, because I’m far from being an expert in this field. But if I’m right, that would match the performance of at least a $3bn computer system. An A100 costs $10,000, and presumably additional components are required to stack 300,000 of them together. We don’t know how much Dojo has cost, but judging by Tesla’s capex spending, it can only be a fraction of that.
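The chart's A100-equivalent axis can be translated back into FLOPS, with one important caveat: precision. The TOP500 ranks machines on FP64, while AI "exaflops" are usually quoted at lower precision. The sketch uses NVIDIA's published A100 peaks: 19.5 TFLOP/s for FP64 on tensor cores and 312 TFLOP/s for dense BF16. The 300,000-GPU count and the $10,000 per-GPU price come from the text above and are not independently verified.

```python
A100_PEAK_FLOPS = {
    "FP64 (tensor core)": 19.5e12,
    "BF16 (dense)": 312e12,
}
NUM_GPUS = 300_000      # A100 equivalents read off Tesla's chart
PRICE_PER_GPU = 10_000  # USD, the article's estimate; excludes networking, storage, power

for precision, peak in A100_PEAK_FLOPS.items():
    exaflops = NUM_GPUS * peak / 1e18
    print(f"{precision}: {exaflops:.1f} exaflops peak")

gpu_bill = NUM_GPUS * PRICE_PER_GPU
print(f"GPUs alone: ${gpu_bill / 1e9:.1f}bn")
```

At BF16 peak this comes to roughly 94 exaflops, but at FP64 only about 6. So how Dojo compares with a TOP500 system depends heavily on which precision the comparison uses.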

Such a successful Dojo ramp would be a leap forward for Tesla’s FSD program. It would lend credibility to its humanoid bot program. And it would spark optimism that Dojo could eventually become a business unit of its own, providing AI outsourcing services to other companies.

Sincerely,

Your Fallacy Alarm

While having a purpose-built machine for training is a huge advantage, for me another major advantage of Dojo is owning the machine as capex as opposed to renting it via opex. Getting FSD working is going to take more than a big machine and a bunch of data. They’re going to need countless iterations tweaking the training algorithms. After that, they will need computationally expensive simulations to test the viability of the trained models in a plethora of situations. If I have to shell out millions for each training and testing cycle, I’m going to be a whole lot less iterative/agile than if it’s just the cost of electricity. In an unknown space like this, I’d much rather take a try-as-many-things-as-possible approach than live in a world where I have to justify my ongoing $400m opex spend to the board.

great take, thank you!